Multi-Person Pose Estimation Framework: AlphaPose Study Notes

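My graduation project is on vision-based gait recognition. While working on it I came across the AlphaPose framework and found it quite interesting.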

Introduction to Multi-Person Pose Estimation

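Multi-person pose estimation has two mainstream approaches: the two-step framework and the part-based framework. The first detects a bounding box for every person in the scene and then estimates the pose inside each box independently (the top-down method). The second first detects all body-part keypoints in the scene and then assembles them into one skeleton per person (the bottom-up method). With the first approach, pose accuracy depends heavily on the quality of the detected bounding boxes. With the second, the assignment of parts becomes ambiguous when two people stand very close together, and because it relies only on the relation between pairs of parts, it loses global information. AlphaPose takes the top-down approach and proposes the RMPE (Regional Multi-person Pose Estimation) framework.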
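
To make the top-down flow described above concrete, here is a minimal sketch of its control flow. It is not AlphaPose's code: `detect_people` and `estimate_single_pose` are hypothetical stand-ins that return fixed dummy values where a real person detector and a real single-person pose network would go. The only point is to show that each person is cropped, processed independently, and that the keypoints are then shifted back into full-image coordinates.

```python
import numpy as np

def detect_people(image):
    """Hypothetical stand-in for a person detector.
    Returns bounding boxes as (x, y, width, height)."""
    return [(10, 20, 40, 80), (60, 10, 30, 70)]

def estimate_single_pose(crop, k=17):
    """Hypothetical stand-in for a single-person pose estimator.
    Returns k keypoints as rows of (x, y, confidence) in crop coordinates."""
    h, w = crop.shape[:2]
    xs = np.full(k, w / 2.0)          # dummy: all points on the vertical midline
    ys = np.linspace(0, h - 1, k)     # dummy: spread from top to bottom
    conf = np.ones(k)
    return np.stack([xs, ys, conf], axis=1)

def top_down_pose(image):
    """Step 1: detect every person. Step 2: estimate each pose independently."""
    poses = []
    for (x, y, w, h) in detect_people(image):
        crop = image[y:y + h, x:x + w]
        kpts = estimate_single_pose(crop)
        kpts[:, 0] += x               # map back to full-image coordinates
        kpts[:, 1] += y
        poses.append(kpts)
    return poses

image = np.zeros((120, 120, 3), dtype=np.uint8)
poses = top_down_pose(image)
print(len(poses), poses[0].shape)
```

The sketch also shows why the approach is sensitive to box quality: whatever the detector crops is all the pose estimator ever sees.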

[GitHub repository](https://github.com/MVIG-SJTU/AlphaPose.git) (clone it with `git clone https://github.com/MVIG-SJTU/AlphaPose.git`)

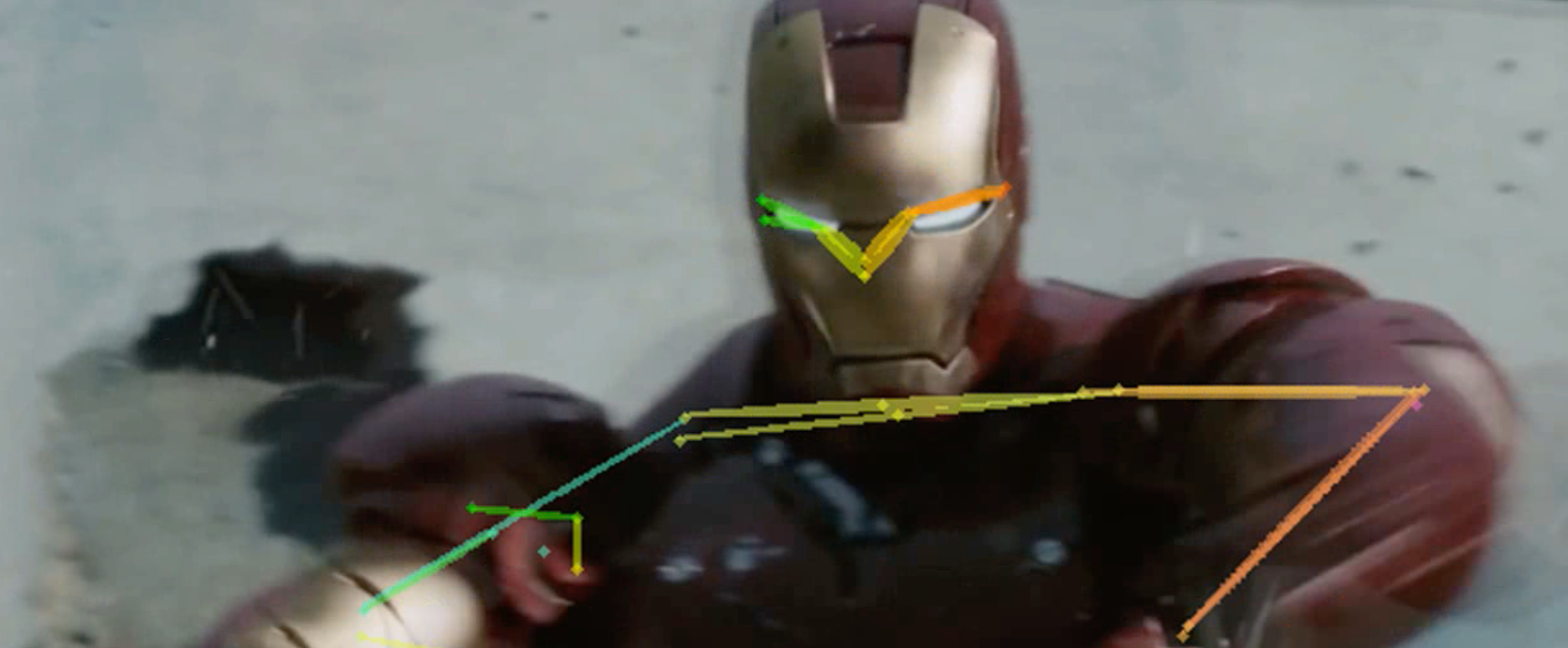

The result of processing a video file is shown in the figure below.

Processing an image file produces a JSON file containing the keypoints:

    [{"image_id": "demo\\1.jpg", "category_id": 1,
      "keypoints": [
        127.13093566894531, 192.38218688964844, 0.7934320569038391,
        131.0470428466797, 184.54995727539062, 0.7785136699676514,
        119.29869842529297, 188.46607971191406, 0.7877119779586792,
        140.8373260498047, 184.54995727539062, 0.7160547971725464,
        113.42453002929688, 192.38218688964844, 0.5955836176872253,
        160.4178924560547, 210.0047149658203, 0.7830912470817566,
        109.5084228515625, 217.83692932128906, 0.7417958974838257,
        193.7048797607422, 229.5852813720703, 0.8513346314430237,
        80.13756561279297, 245.24974060058594, 0.8397173285484314,
        187.83070373535156, 262.87225341796875, 0.8223487734794617,
        105.5923080444336, 274.62060546875, 0.8222940564155579,
        164.33401489257812, 280.4947509765625, 0.6314892768859863,
        131.0470428466797, 284.410888671875, 0.6777188777923584,
        172.16624450683594, 337.2784423828125, 0.7752819657325745,
        140.8373260498047, 341.1945495605469, 0.7747130990028381,
        189.78875732421875, 390.14593505859375, 0.7158164978027344,
        160.4178924560547, 392.10400390625, 0.7240789532661438],
      "score": 2.8172354698181152},
     {"image_id": "demo\\1.jpg", "category_id": 1,
      "keypoints": [
        577.15087890625, 185.02479553222656, 0.8178495168685913,
        583.4673461914062, 178.7082977294922, 0.8182868361473083,
        572.9398803710938, 180.8137969970703, 0.8408921957015991,
        593.9948120117188, 178.7082977294922, 0.699515163898468,
        566.6234130859375, 182.91929626464844, 0.21849828958511353,
        608.7333374023438, 206.07974243164062, 0.813751220703125,
        572.9398803710938, 210.29074096679688, 0.7235016822814941,
        623.4717407226562, 243.97866821289062, 0.8144767880439758,
        568.7288818359375, 246.0841522216797, 0.6698101162910461,
        617.1552734375, 262.9281311035156, 0.7532274127006531,
        545.5684204101562, 262.9281311035156, 0.731727659702301,
        600.3113403320312, 273.45562744140625, 0.6763455271720886,
        575.0453491210938, 275.56109619140625, 0.7336585521697998,
        583.4673461914062, 334.5149841308594, 0.767824113368988,
        587.6783447265625, 336.6204833984375, 0.7423863410949707,
        610.8388061523438, 397.6798400878906, 0.5873599648475647,
        615.0498046875, 397.6798400878906, 0.5767690539360046],
      "score": 2.670726776123047},
     ....]

Below is the Python program I wrote to mark the keypoints from the JSON file on the image.

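    # Fields of each entry in the result JSON:
    #   "image_id"    : id of the image the object belongs to
    #                   (in the output above it is the image path, a string)
    #   "category_id" : int, category id; every object belongs to one category
    #   "keypoints"   : [x1, y1, v1, ...], an array of length 3*k, where k is the
    #                   number of keypoints defined for the category. For the human
    #                   pose used here k = 17, so the array holds 51 values.
    #                   Each keypoint is three values: the x and y coordinates and a
    #                   third value v (in the output above, a float confidence).
    #   "score"       : float, confidence of the whole pose
    #   "box"         : [x, y, width, height]; x, y is the top-left corner
    #   "idx"         : [0.0]
    import json

    import cv2
    import numpy as np

    # Read the image
    img = cv2.imread("1.jpg")
    if img is None:
        raise FileNotFoundError("could not read 1.jpg")

    with open('./alphapose-results.json', 'r', encoding='utf8') as fp:
        json_data = json.load(fp)

    for i in json_data:
        if i['image_id'] == "demo\\1.jpg":
            list2 = np.array(i['keypoints'])
            list3 = list2.reshape(17, 3)   # one row per keypoint: x, y, v
            n = 0
            for j in list3:
                cor_x = j[0]
                cor_y = j[1]
                # Draw a filled green dot at the keypoint
                cv2.circle(img, (int(cor_x), int(cor_y)), 3, (0, 255, 0), -1)
                # Uncomment to write the keypoint index next to each dot:
                # cv2.putText(img, f'{n}', (int(cor_x) + 5, int(cor_y) + 5), cv2.FONT_HERSHEY_PLAIN, 2, (255, 0, 0), thickness=1)
                n = n + 1
                print(n)   # running count of keypoints drawn

    # Notes on cv2.circle(img, (cor_x, cor_y), 3, p_color[n], -1):
    #   cv2.circle() draws a circle on an image.
    #   img: the image to draw on.
    #   (cor_x, cor_y): centre of the circle, as an (x, y) tuple of integers.
    #   radius: 3 is the radius of the circle.
    #   p_color[n]: colour of the circle outline, as a BGR tuple; (255, 0, 0) is blue.
    #   thickness: outline thickness in pixels; -1 fills the circle with the colour.

    # Show the image
    cv2.imshow("Demo", img)   # window name, image
    # Wait for a key press
    cv2.waitKey(0)
    cv2.destroyAllWindows()

The result is shown in the figure below.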
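
The per-keypoint third value in the JSON can also be used to skip unreliable points before drawing. The following is a small sketch of that idea, not part of AlphaPose: `COCO_KEYPOINT_NAMES`, `visible_keypoints` and the `min_conf` threshold are names I made up for illustration, and it assumes the 17 keypoints follow the standard COCO order (the first five points in the output above cluster around the head, which is consistent with that, but I have not verified it against the AlphaPose source). The sample entry uses only the first five keypoints of the second person shown above, to keep it short.

```python
import numpy as np

# Assumption: keypoints are in the standard COCO 17-keypoint order.
COCO_KEYPOINT_NAMES = [
    "nose", "left_eye", "right_eye", "left_ear", "right_ear",
    "left_shoulder", "right_shoulder", "left_elbow", "right_elbow",
    "left_wrist", "right_wrist", "left_hip", "right_hip",
    "left_knee", "right_knee", "left_ankle", "right_ankle",
]

def visible_keypoints(entry, min_conf=0.5):
    """Return {name: (x, y)} for keypoints whose third value is >= min_conf.
    Hypothetical helper; min_conf=0.5 is an arbitrary illustrative threshold."""
    kpts = np.asarray(entry["keypoints"], dtype=float).reshape(-1, 3)
    result = {}
    for name, (x, y, conf) in zip(COCO_KEYPOINT_NAMES, kpts):
        if conf >= min_conf:
            result[name] = (int(round(x)), int(round(y)))
    return result

# First five keypoints of the second person in the JSON output above.
entry = {
    "image_id": "demo\\1.jpg",
    "keypoints": [
        577.15087890625, 185.02479553222656, 0.8178495168685913,
        583.4673461914062, 178.7082977294922, 0.8182868361473083,
        572.9398803710938, 180.8137969970703, 0.8408921957015991,
        593.9948120117188, 178.7082977294922, 0.699515163898468,
        566.6234130859375, 182.91929626464844, 0.21849828958511353,
    ],
}

found = visible_keypoints(entry)
for name, point in found.items():
    print(name, point)
```

Here the fifth point (confidence about 0.22) is dropped, while the other four are kept. The returned integer coordinates can be passed straight to `cv2.circle` as in the program above.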